In my quest for a guilty pleasure with educational value, I turned to the language app Duolingo. And this app taught me a lot. Just not necessarily French, as I realized in Geneva, where I gave the opening keynote at the World Congress of the International Federation of Translators.

Move fast and break things?

A few months ago, Duolingo announced they were replacing human translators with AI. The CEO put it bluntly:

“We’d rather move with urgency and take occasional small hits on quality than move slowly and miss the moment.”

That alone is telling: someone still thought this 2025 remix of the old “move fast and break things” motto was legit. Judging from the outcry it sparked, it isn’t.

But how does that AI translation actually look in practice?

Camembert or Pie Charts – The AI Doesn’t Know

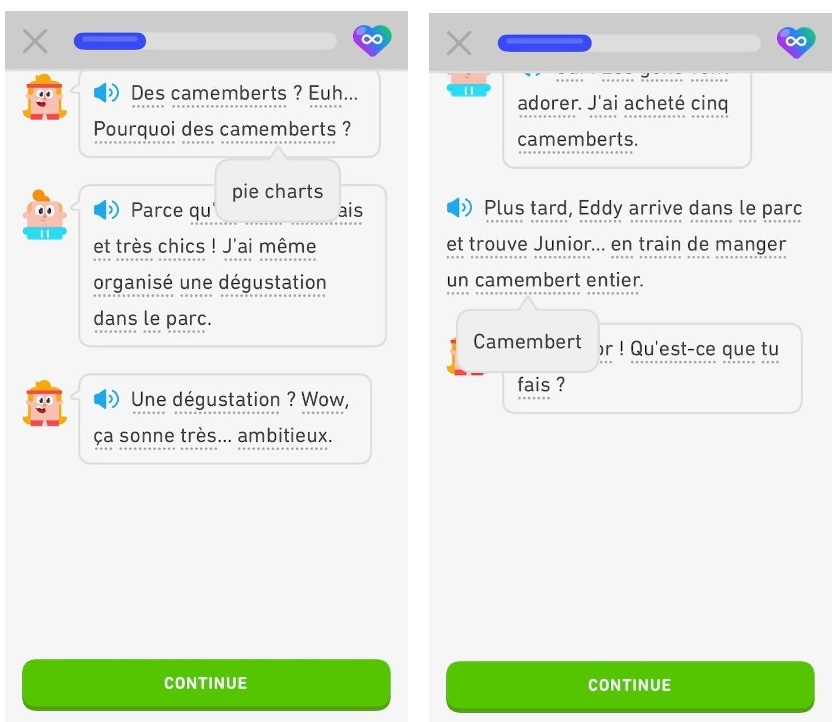

A concrete example from the Duolingo app illustrates the problem vividly:

Step 1: A character proudly announces a “tasting of pie charts” in the park.

Step 2: A few lines later, “camembert” is correctly translated as cheese.

Step 3: Then it turns back into pie charts.

It’s funny. Almost charming. And not really surprising: in French, “camembert” is also the everyday word for a pie chart. If you are a large language model, your obsession with statistics simply shines through.

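Yet it illustrates a deeper point: the AI translates word by word, with no sense of context, tone, or meaning. Within a single scene, the same word flips between two senses, even though a tasting in the park makes it obvious that cheese is meant.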

What exactly are we automating?

This little anecdote led me to a broader reflection: what are we really automating when we automate translation?

A helpful way to think about it is to distinguish three types of automation in AI, inspired by Arvind Narayanan and Sayash Kapoor’s work on “AI Snake Oil”.

1. Perception

AI is good at identifying things – recognizing faces or classifying images. These are tasks with a clear right or wrong answer. When there’s enough data, AI often outperforms humans because this is essentially pattern recognition at scale.

(Full disclosure: I’m face-blind, so facial recognition would save me from a lot of embarrassing situations. As long as you let me violate your privacy 😈.)

2. Judgment

Things get tricky when we use AI to automate judgment: grading essays, detecting spam, or, voilà, translating complex texts.

In such cases, there is no single right answer. What counts as spam to you might be an attractive offer to me. What reads like a strong essay to one teacher might miss the point for another.

So how could a machine be expected to navigate the nuances of translation?

It’s not just about perception – it depends on judgment: the ability to understand tone, preserve irony, and choose the right cultural reference.

3. Prediction

Finally, many AI systems today claim to predict social outcomes:

Will you repay a loan? Drop out of school? Get divorced? End up in jail? But when it comes to human behavior, these tools often perform only slightly better than chance. We might as well flip a coin – but somehow it sounds more scientific when an algorithm does it.

The Missing Element: Judgment

So, in essence: when we use AI for translation, we either reduce language to perception (something right or wrong) or, with generative models, treat it as prediction (something likely or unlikely).

In both cases, we lose what matters most: judgment.

So what does that mean in real life? The stakes are low when it’s camembert versus pie charts.

But they are much higher when the same systems are used in court hearings, education, or healthcare. In these settings, mistranslation turns into misjudgment, and this is where the difference between processing language and understanding the world becomes painfully clear.